“Fake news” is currently a hot topic in, well, the news.

- We Tracked Down A Fake-News Creator In The Suburbs (NPR)

- Fake News and the Internet Shell Game (NYT)

- Trump’s fake-news presidency (WaPo)

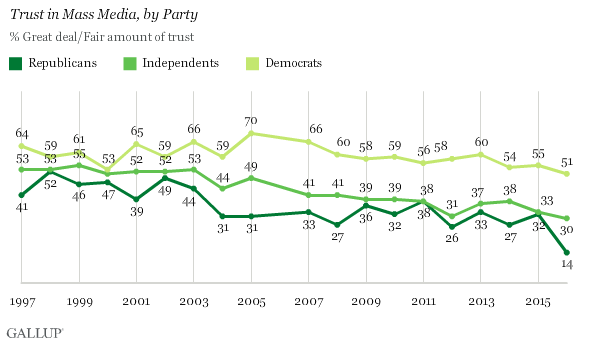

At the same time that fake news has suddenly become the cause du jour, trust in legacy media is at an all-time low. Funny how that works.

Part of the reason for this decline is that most legacy media have discarded any pretense of neutrality. As Scott Greenfield writes, the solution to fake news is progressive news:

While the legitimate media may not be inclined to fabricate news from whole cloth, they don’t seem to have much of an issue cherry picking facts and arguments, adding in a heavy dose of spin, to assure that you walk away with the certainty that their agenda is the only true one.

The tweaks that legacy media put on stories used to go unnoticed, but with social media, people can discuss, compare, and contrast varying interpretations of events as they never have before.

People no longer accept what is reported in legacy media at face value, but instead search for varying takes and opinions online. Legacy media doesn’t much care for this. They’re supposed to be the gatekeepers and opinion makers! How dare you not just take what they give you and like it!

Facebook To Blame or To The Rescue?

Many in the legacy media are blaming this trend on Facebook, Twitter, and social media in general. Mark Zuckerberg, the CEO of Facebook, took to the platform to say that Facebook is a technology company, not a media company. He also wrote that it was up to users to decide what news to follow and that it was a “crazy idea” that Facebook influenced the election.

So Facebook wants you to believe the following:

- It’s crazy to think that information on Facebook could influence people’s selection of political candidates.

- Companies should pay Facebook millions of dollars to advertise on its platform because it influences people’s selection of products and services.

If you’re experiencing a bit of cognitive dissonance, I don’t blame you.

Zuckerberg eventually backtracked, issuing a seven-point plan to combat fake news:

- Stronger detection.

- Easy reporting.

- Third party verification.

- Warnings.

- Related articles quality.

- Disrupting fake news economics.

- Listening.

So essentially: spam filtering, relying on people flagging content that they don’t like, algorithmic organization, “respected” fact-checking organizations, and input from legacy media.

Does that really sound better to you?

Oh, and by the way, Facebook recently finished working on a special censorship tool at the request of the Chinese government. Nothing to see here, move along.

The Scunthorpe Problem

The problem with relying on computers and algorithms to filter “offensive” content is that they are often awful at it. They are blunt tools for a delicate and complex issue.

Did you know that Google once blocked a town’s local news sites? Or that AOL completely forbade the town’s residents from signing up for its service? No employee at either company manually blocked the town; automated filters and algorithms deemed it inappropriate.

The small town of Scunthorpe, in North Lincolnshire, England, has a problematic name. It contains the offensive string of characters “cunt,” and the town continues to run into trouble with algorithmic filtering. This is known as the Scunthorpe Problem.
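The mechanism is easy to reproduce. Below is a minimal sketch of a naive filter that flags any text containing a blocked string anywhere, next to a variant that only matches whole words. The blocklist and function names are hypothetical, and nobody outside those companies knows exactly how Google’s or AOL’s filters were built; this only illustrates the failure mode.

```python
import re

# Hypothetical one-word blocklist, for illustration only.
BLOCKLIST = ["cunt"]


def naive_filter(text: str) -> bool:
    """Flag text if a blocked string appears anywhere, even inside another word."""
    lowered = text.lower()
    return any(bad in lowered for bad in BLOCKLIST)


def word_boundary_filter(text: str) -> bool:
    """Flag text only if a blocked string appears as a standalone word."""
    return any(
        re.search(rf"\b{re.escape(bad)}\b", text, re.IGNORECASE)
        for bad in BLOCKLIST
    )


if __name__ == "__main__":
    town = "Scunthorpe, North Lincolnshire"
    print(naive_filter(town))          # True: the whole town gets blocked
    print(word_boundary_filter(town))  # False: the town gets through
```

Whole-word matching fixes this particular case, but it is trivially dodged by creative spelling, which is one plausible reason filters tend to stay blunt.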

It’s not a one-off problem, either. A couple of years ago, while converting Tolstoy’s War and Peace to the Nook format, the publisher took the Kindle edition, searched for every instance of ‘Kindle’ in the book, and replaced it with ‘Nook’ (“It was as if a light had been Nookd in a carved and painted lantern…”). Algorithmic screw-ups have been going on for decades.
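A blind search and replace produces exactly that kind of result. The sketch below assumes the publisher’s tool behaved like a case-insensitive replace with no regard for word boundaries; we don’t know what software or settings were actually used, and the function names are hypothetical.

```python
import re


def blind_replace(text: str) -> str:
    """Replace every case-insensitive 'kindle', even when it is part of another word."""
    return re.sub("kindle", "Nook", text, flags=re.IGNORECASE)


def whole_word_replace(text: str) -> str:
    """Replace 'Kindle' only when it stands alone as a capitalized word."""
    return re.sub(r"\bKindle\b", "Nook", text)


if __name__ == "__main__":
    line = "It was as if a light had been kindled in a carved and painted lantern"
    print(blind_replace(line))       # "...had been Nookd in a carved..."
    print(whole_word_replace(line))  # unchanged
    print(whole_word_replace("the Kindle edition"))  # "the Nook edition"
```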

But even when filters and algorithms do work correctly, should you really trust them?

Control Of Choice Architecture

- What happens when Google begins to auto-complete search queries for you?

- What happens when Twitter gets to decide which hashtags auto-complete?

- What happens when Facebook selects what is trending?

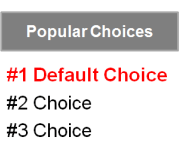

One of the most important and least considered problems in the world right now is choice architecture.

Choice architecture is the design of different ways in which choices can be presented to consumers, and the impact of that presentation on consumer decision-making.

Whether through the coding of an algorithm or through human editorial control, something or someone is subtly influencing what information you are exposed to: what auto-completes in a search bar, what is trending on social media, what shows up on the news.

A great example comes from an anecdote in the book Nudge, which highlights both the power and the problems of choice architecture.

CALPERS is the gargantuan pension fund for California state teachers. In a study, CALPERS found that the median number of times teachers changed the asset allocation of their portfolios (the percentage in stocks, bonds, and cash) during their careers was... wait for it... zero. At least half of the people in the program just went with the default choice.

In other words, at least half of the people in that pension never changed their asset allocation. They stuck with the generic starting setting for 15 to 40 years, relying completely on the choice of some random person in a human resources department somewhere.

If people don’t take the time to make informed decisions regarding their life savings, what do you think happens when they’re looking at social media or at search results?!

Fake News Thought Police

Zuckerberg and others in positions of power at social media companies (like Twitter’s Orwellian Trust & Safety Council) are sympathetic to the legacy media they are replacing. Tech companies are full of forward-thinking, liberal ‘disruptors.’

They like to think of themselves as an enlightened technocratic elite, helping people make better decisions and exposing them to “progressive” opinions. Complete, free-ranging exchange of thoughts and news gets in the way of that. Going back to Greenfield:

Free speech, even if it allows for misinformation, isn’t good enough because it still allows for evil “values” to be promulgated, inconsistent with progressive values which are the only values worthy of spreading. The solution to fake news is to wipe the internet clean of all dissenting views that spread “illiberal” values so that only positive values appear. And then you can chat about it with your friends over a nice chardonnay.

Because fake news exists, people who don’t adequately vet a story may come away believing something untrue, and that can sow confusion. But what about information that isn’t fake news but is marked as “undesirable” by a computer algorithm? What happens when people flag something as fake news when really it just offends their special snowflake sensibilities?

Is the solution to set up filters and algorithms determining what people should see and hear? All constructed by an anonymous, unelected, technocratic elite?

I’d rather people take personal responsibility for their news consumption. Not just passively receive information, but become informed consumers when it comes to their news sources.

The appropriate solution isn’t to restrict people’s choices, but to help people be aware of all the choices available to them, and then provide them tools to make educated decisions. And this all needs to happen transparently, in public. It doesn’t need to be decided by a clandestine cabal in Silicon Valley.

Using algorithms, legacy media, and partisan reporting to determine that some news, words, or opinions are “problematic” or “fake” smacks of the establishment of a thought police. It’s a knee-jerk, reactionary quick fix to a complex problem. And as H. L. Mencken said:

“For every complex problem there is an answer that is clear, simple, and wrong.”

[divider]

11/30/2016 16:00 UPDATE

Not 48 hours after I wrote the above, and after the disaster that was Reddit’s CEO editing comments, Reddit announced that it is going to do exactly what I described above in order to filter out the subreddit r/The_Donald. It’s fun predicting the future.

Remember: while all animals are equal, some are more equal than others.

[divider]

If this made you upset, just imagine what my book would do.